Predicting the toxicity of drugs without animal testing

- 23 Jul. 2018

A mini-brain has been created from reprogrammed human skin cells. It allows researchers to study brain development as well as the pharmacological and toxicological effects of new drugs. Combined with machine learning algorithms, some of which can predict toxicity with 90% accuracy, this strategy has become a credible alternative to animal testing.

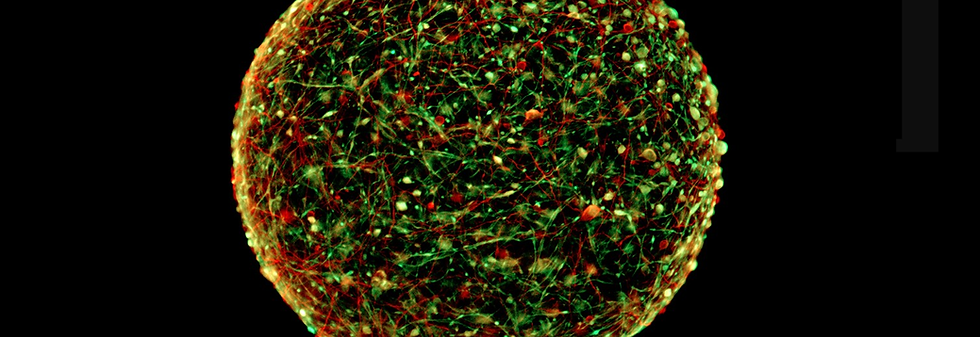

In this mini-brain, less than 0.5 mm in diameter and created by the team of Thomas Hartung, the undifferentiated cells appear in red and the mature neurons in green. Photo © Thomas Hartung / Johns Hopkins.

The French scientist and philosopher Henri Poincaré (1854–1912) famously stated: “Science is built of facts the way a house is built of bricks: but an accumulation of facts is no more science than a pile of bricks is a house” (1). Much of our science has gone in this direction: we study the bricks, the cells and molecules, but forget about the house, the organism. This deconstructive approach may have been necessary, but too often we could not see the forest for the trees, or the house for the bricks. This may not matter when publishing the next paper, but when we have to decide whether a new drug can be given to humans for the first time, or whether a chemical can go into a product and onto the market, we had better be able to trust our verdict. Many lives might be at stake.

For this reason, the safety sciences have been very reluctant to integrate new approaches. Quite incredibly, the most important test approaches, animal tests, were designed 50 to 80 years ago. No other field of science relies to such an extent on “prehistoric” methods. But this approach is reaching its limits: animal tests are costly and labor-intensive, and they cannot be automated. The safety assessment of a new pesticide costs $20 million and takes more than five years of testing, and it requires about 20 kg of the substance to dose all the animals over the years. All this is usually not feasible for chemicals other than pesticides and drugs, as their product life cycles and profit margins do not allow such expenses.

The solution is what we have recently named the 3S approach: the use of Systematic reviews and experimental Systems toxicology approaches for Systemic toxicology. Systemic toxicology describes the long-term effects of substances on the organism as a whole: chronic toxicity, cancer, and toxicity to reproduction and to the developing offspring. To stay within Poincaré’s illustrative picture, these are the dangers to the house. The three new technologies are Systematic Reviews and experimental and computational Systems Toxicology.

Systematic reviews are a different way of handling existing information: they evaluate and condense the literature objectively, in the spirit of evidence-based medicine. By defining transparent questions and criteria for inclusion and exclusion, evaluating the quality and strength of the evidence, and performing a meta-analysis of the retrieved studies, they give the most comprehensive and objective view possible of the existing knowledge. This tells us what to trust and what to replace.
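To make the meta-analysis step more concrete, here is a minimal sketch of one common way to pool the results of several studies: a fixed-effect, inverse-variance weighted average. It is an illustration only, not the procedure used by the author's group; the function name `fixed_effect_meta` and the three study results are hypothetical, and real systematic reviews often use random-effects models as well.

```python
import math


def fixed_effect_meta(effects, std_errors):
    """Pool per-study effect estimates with inverse-variance weights.

    effects: effect size reported by each study (e.g. log odds ratios)
    std_errors: standard error of each effect size
    Returns (pooled_effect, pooled_standard_error).
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")
    # Each study is weighted by 1 / variance, so more precise studies count more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical log odds ratios from three studies of the same exposure.
effects = [0.40, 0.10, 0.25]
std_errors = [0.20, 0.10, 0.15]

pooled, se = fixed_effect_meta(effects, std_errors)
low, high = pooled - 1.96 * se, pooled + 1.96 * se  # approximate 95% confidence interval
print(f"pooled effect {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

The pooled estimate lands closest to the most precise study (standard error 0.10), which is the point of weighting: the review does not simply average the papers, it gives each one the weight its evidence deserves.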

Experimental Systems Toxicology refers to organoids and organ-on-chip test systems, which are currently mushrooming. Fueled by stem cell technologies and bioengineering, a growing number of single-organ and multi-organ models are emerging that reflect aspects of organ architecture, organ functionality and organ interactions. For example, our group at Johns Hopkins University in Baltimore (Maryland) has developed mini-brains from reprogrammed human skin cells. This reprogramming returns the cells to an embryonic-like state: they can again develop into all the different cell types of the human body. More and more protocols for turning them into brain, heart, liver or other cells are becoming available.

In our case, skin fibroblasts were transfected (*) to obtain immortalized induced pluripotent stem cells, a method first published in 2006 and awarded the Nobel Prize as early as 2012 because of its groundbreaking impact on the life sciences. These cells can be cultured over many passages or frozen and stored, making it possible to produce enormous quantities of cells.

HIGHLY REPRODUCIBLE

Using specific protocols that add signaling molecules, we can produce neuroprecursor cells, the stem cells of the brain. At this stage, the cells are scraped off the culture dish and the cell suspension is further cultured on a shaker, which keeps them in permanent movement. This forces them to form perfectly round balls of cells, whose diameter is determined by the cell density and the shaking velocity.

The big advantage of this culture is its reproducibility in size and cell composition. Our mini-brains contain the different neurons of the brain as well as their helper cells (astrocytes and oligodendrocytes, which are important for the proper functioning of the brain), which together form the central nervous system. And these barely visible organoids reproduce parts of the brain’s architecture and functionality: the neurons connect and start communicating, forming electrical networks; the oligodendrocytes myelinate the axons; and the astrocytes pamper and protect the neurons. That is what is meant by microphysiological: they represent physiology on a small scale. They are small houses, not just bricks. This is very different from traditional cell cultures, where cells lie in their plastic dishes like eggs in a pan, “sunny side up”. These organoids are 1,000 times denser, allowing all the cell-cell contacts that make an organ.

The highly reproducible composition and size of the mini-brains make it possible to study brain development as well as pharmacological and toxicological effects. These organoids can be made from different donors, including various patient groups, especially patients with genetic defects. They can be infected with viruses, bacteria and other pathogens. They can be grafted with brain tumors to study the tumors’ response to chemotherapy. The sky is the limit.

In parallel with such advances in bioengineering, the informatics revolution provides us with the means for modeling, for integrating test results and even for predicting toxic properties directly from the chemical structure. This Computational Systems Toxicology ranges from the modeling of organs, organ development and organ responses up to virtual patients. At the level of bacteria this is already possible: the entire metabolic network of certain bacteria can be modeled. For example, a paper in 2012 proposed a whole-cell model of Mycoplasma genitalium that includes all cell components and their interactions. Models of a virtual embryo or a virtual liver are on the way. The ultimate vision is a virtual patient, an avatar of a human on which treatments could be simulated for a typical human or, in the future, for a given individual. We might imagine starting with medicine’s famous 70 kg man and then adapting our avatar to be female, 120 kg and diabetic.

TWO DAYS OF CALCULATION

Big Data and machine learning (**) support these developments. Ever larger databases are being built, in toxicology and elsewhere. We have been interested in using the similarity of chemical structures to predict toxicity. The concept is simple: similar structure, similar property. The problem is that chemists have synthesized more than 70 million structures, which means that more than 30 trillion pairs (one-to-one comparisons) are possible. It took the supercomputers of the Amazon cloud two days to calculate for us a map in which similar chemicals are close to each other. For about 20,000 of these chemicals we could find animal test data. Now we can place any chemical on the map and see what we know about its neighbors. Those close to the “bad guys” are likely to be bad themselves; those in neighborhoods of the good guys, much less so. This is a revolutionary approach to estimating the risks of chemicals.
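The neighborhood idea can be sketched in a few lines. The version below assumes each chemical is represented by a binary structural fingerprint (stored here as a set of “on” bit positions), measures similarity with the Tanimoto coefficient, and lets the k most similar chemicals with known test results vote on whether the query is toxic. This is a simplified stand-in for the approach described above, not the author’s actual model; the fingerprints, labels, `k=3` and the function names are all hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity of two fingerprints given as sets of 'on' bit positions."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def read_across(query, labelled, k=3):
    """Predict toxicity of `query` from its k most similar labelled neighbours.

    labelled: list of (fingerprint, is_toxic) pairs for chemicals with test data.
    Returns (predicted_toxic, neighbours), where neighbours are (similarity, is_toxic).
    """
    # Rank every labelled chemical by structural similarity to the query.
    scored = sorted(
        ((tanimoto(query, fp), toxic) for fp, toxic in labelled),
        key=lambda pair: pair[0],
        reverse=True,
    )
    neighbours = scored[:k]
    toxic_votes = sum(1 for _, toxic in neighbours if toxic)
    # Simple majority vote: close to the "bad guys" means likely bad.
    return toxic_votes * 2 > len(neighbours), neighbours


# Hypothetical fingerprints (bit positions) and animal-test labels.
labelled = [
    ({1, 2, 3, 4}, True),
    ({1, 2, 3, 5}, True),
    ({1, 2, 4, 6}, True),
    ({7, 8, 9}, False),
    ({7, 8, 10}, False),
]

for query in ({1, 2, 3, 6}, {7, 8, 11}):
    predicted_toxic, neighbours = read_across(query, labelled)
    print(sorted(query), "->", "toxic" if predicted_toxic else "non-toxic")
```

The first query shares most of its bits with the toxic chemicals and is predicted toxic; the second sits next to the non-toxic ones. Comparing every pair among n chemicals takes n(n-1)/2 similarity calculations, which is why building the full map for tens of millions of structures needed cloud-scale computing.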

We recently showed that this method predicts the outcome of animal tests as well as, or better than, the animal tests reproduce their own results. This is about as good as it can get, because a golden rule of bioinformatics is “garbage in, garbage out”: a model built on animal data cannot be expected to agree with those data more often than the tests agree with themselves. The preliminary results for predicting all known toxicities of these 20,000 chemicals are very exciting: the computer is accurate in more than 90% of the cases. However, until this has been independently verified, it has to be taken with a grain of salt. If the ongoing validation studies are passed, this would form the basis for an assessment on par with current animal tests. The new Systems Toxicology approaches could then be added on top to genuinely improve consumer safety: perhaps even better than a brick house, built from modern components and with a modern design.
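The comparison with the reproducibility of the animal test can be illustrated with simple agreement rates. The sketch below assumes binary toxic/non-toxic calls and uses made-up results for eight chemicals: two repeated runs of the same animal test and one set of model predictions. It is only a toy version of the idea, not the statistics used in the actual validation work; the variable names and data are hypothetical.

```python
def concordance(a, b):
    """Fraction of chemicals on which two lists of binary toxic/non-toxic calls agree."""
    if not a or len(a) != len(b):
        raise ValueError("need two non-empty lists of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


# Hypothetical results for eight chemicals (True = classified as toxic).
animal_test_run1 = [True, True, False, False, True, False, True, False]
animal_test_run2 = [True, False, False, False, True, False, True, True]
model_prediction = [True, True, False, False, True, False, False, False]

# How often does the animal test agree with itself when repeated?
reproducibility = concordance(animal_test_run1, animal_test_run2)

# How often does the model agree with each run of the animal test?
model_vs_run1 = concordance(model_prediction, animal_test_run1)
model_vs_run2 = concordance(model_prediction, animal_test_run2)
mean_model_agreement = (model_vs_run1 + model_vs_run2) / 2

print(f"test reproducibility: {reproducibility:.0%}")
print(f"model agreement with the test: {mean_model_agreement:.0%}")
```

In this made-up example the model agrees with the animal test as often as the test agrees with itself, which is the sense in which a prediction can be “as good as you can get” when the reference data are themselves noisy.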

(1) Henri Poincaré, La Science et L’Hypothèse, 1908.

(*) Transfection is the process of introducing foreign genetic material into eukaryotic cells without using a virus as a vector.

(**) Machine learning is the design, analysis, development and implementation of algorithms that enable machines (in a broad sense) to improve through a systematic process and thus to complete increasingly difficult tasks.

> AUTHOR

Thomas Hartung

Medical Doctor and Toxicologist

Thomas Hartung is a medical doctor and toxicologist at the Bloomberg School of Public Health, Johns Hopkins University (Baltimore, USA). He was head of the European Centre for the Validation of Alternative Methods from 2002 to 2008. His work focuses on the development of new assessment approaches in toxicity testing based on genomics.
